Kubernetes Load Balancer – On-Premises & Bare Metal

Everyone knows that using a Kubernetes Load Balancer on-premises is a challenge. Back in the days when I was responsible for network operations, load balancer management was my team's responsibility too. Every time Linux/Unix ops engineers added or moved application servers, they had to open a ticket with the network team listing the backend addresses, TCP ports, and health check requirements to get a big network load balancer configured. Network engineers and Linux engineers, being human, sometimes made mistakes, and then had to work together to fix them and keep production running.

With all the back and forth, fulfilling an average load balancer request commonly took days.

Back then, that was the norm. In the age of the cloud, the norm is an on-demand load balancer service that is available immediately and works seamlessly with Kubernetes. While this makes life easier for DevOps engineers, it is not easy to architect and organize that cool load-balancer-as-a-service experience for an on-prem private cloud.

What user experience are cloud practitioners expecting from Layer-4 load balancers?

Public cloud providers offer a great deal of convenience by automatically provisioning and configuring the services needed by the application.

All major public cloud providers offer on-demand load balancer services that you can use to expose your Kubernetes applications to the public Internet, either directly or through an Ingress controller that uses the on-demand load balancer to expose its public TCP port to the Internet.

How can we get this cloud-like experience in a private cloud, on-prem?

Minimal expectations for a modern Layer-4 load balancer (L4LB):

- Native integration with Kubernetes

- Immediate, on-demand provisioning

- Manageable using K8s CRDs, Terraform, a REST API, and an intuitive GUI

- High Availability

- Horizontally scalable

- TCP/HTTP health checks

- Easy to install & use (L4LB is not rocket science)

Nice-to-have features for a modern Layer-4 load balancer (L4LB):

- Run on commodity hardware

- DPDK / SmartNIC HW acceleration support

- Based on a well-known open-source ecosystem and standard protocols (no proprietary black boxes)

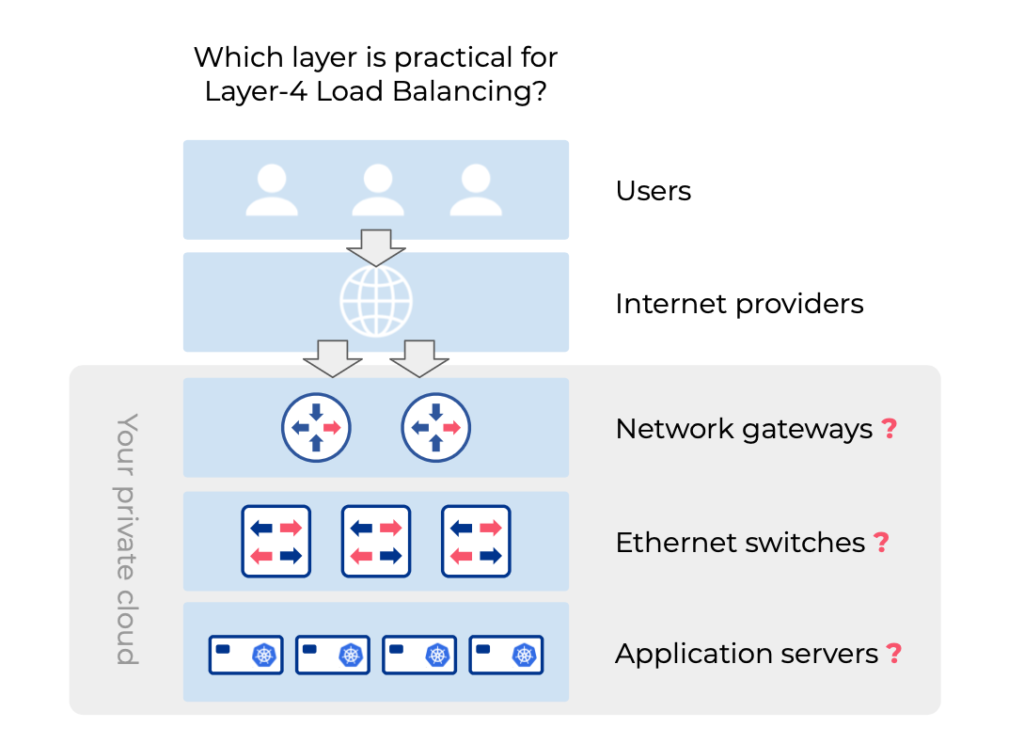

Where is the most practical place for the Layer-4 load balancer function in my architecture?

Ethernet switches can load balance traffic. But is this suitable for Kubernetes?

The main job of Ethernet switches is to provide the highest-performance communication between application servers in your private cloud. Modern Ethernet switches can load balance network traffic using hashing algorithms over IP addresses and TCP/UDP port numbers. This technique is commonly used at Layer 2 (e.g., LAG interface balancing) and Layer 3 (e.g., ECMP across a switch fabric). ECMP-based Layer-3 load balancing can also be used to balance traffic across servers, as is common in ROH (routing on the host) high-performance content delivery architectures. The upside of this method is ultra-high performance. The downside is that the switch cannot tell TCP ports apart when deciding where traffic goes: the route is chosen by destination IP address, so every port of a given IP address lands on the same set of servers. Therefore every application (and every TCP port that must reach a different set of servers) requires a dedicated public IP address, wasting this precious resource.
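To illustrate why ECMP alone cannot act as a Layer-4 load balancer, here is a minimal Python sketch of hash-based next-hop selection. The routing table, addresses, and server names are hypothetical, and SHA-256 merely stands in for whatever hash function a real switch ASIC uses. The point it shows: ports feed the hash (so flows spread out), but they play no part in choosing *which* group of servers is eligible.

```python
import hashlib
from typing import Dict, List, NamedTuple


class Flow(NamedTuple):
    src_ip: str
    dst_ip: str
    src_port: int
    dst_port: int
    proto: str


# Hypothetical routing table: each destination IP maps to one ECMP group of
# next hops (servers). The route lookup uses only the destination address.
ROUTES: Dict[str, List[str]] = {
    "50.117.59.138": ["server-a", "server-b", "server-c"],
}


def ecmp_next_hop(flow: Flow) -> str:
    """Choose a next hop by hashing the 5-tuple into the destination's ECMP group."""
    group = ROUTES[flow.dst_ip]  # ports play no part in selecting the group
    key = "|".join(str(field) for field in flow).encode()
    # SHA-256 stands in for the switch's hardware hash function.
    bucket = int.from_bytes(hashlib.sha256(key).digest()[:4], "big") % len(group)
    return group[bucket]


web = Flow("203.0.113.7", "50.117.59.138", 51000, 80, "tcp")
mail = Flow("203.0.113.7", "50.117.59.138", 51001, 25, "tcp")

# The same flow always hashes to the same server (per-flow stickiness) ...
assert ecmp_next_hop(web) == ecmp_next_hop(web)
# ... but port 80 and port 25 on the same IP draw from one group of servers;
# sending port 25 to a different pool would require a different IP address.
print(ecmp_next_hop(web), ecmp_next_hop(mail))
```

A real fabric behaves the same way at the design level: to send port 25 traffic to mail servers and port 80 traffic to web servers, each service needs its own destination IP and therefore its own route.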

BOTTOM LINE: Ethernet switches are suitable for Layer-3 load balancing but NOT for Layer-4, thus not a fit for a Kubernetes Load Balancer (service of type LoadBalancer).

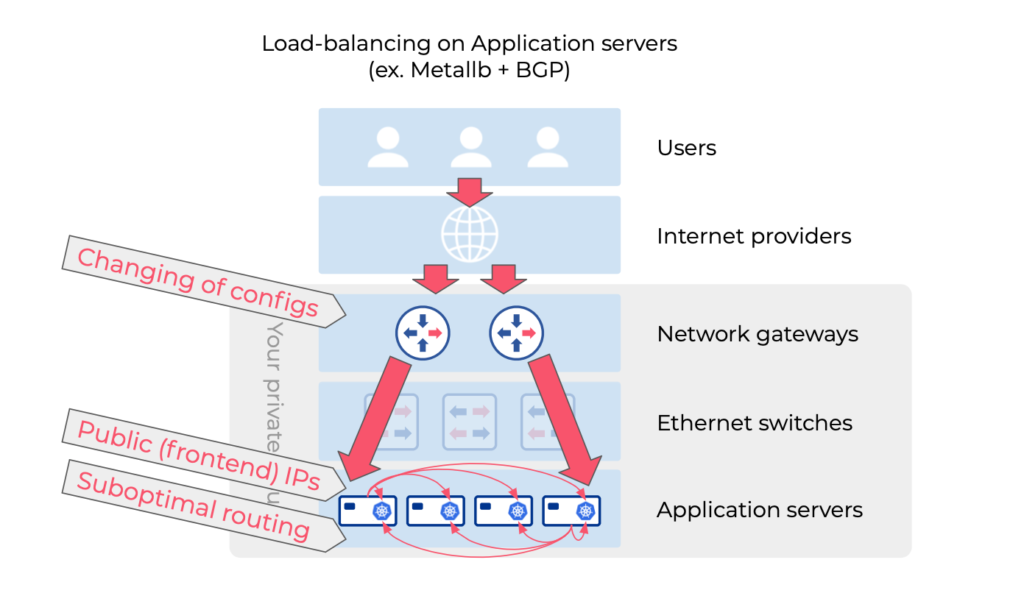

Is it practical to run a Layer-4 load balancer right on the application servers?

There are multiple options for running Layer-4 load balancing on the Kubernetes nodes themselves, including MetalLB, KubeVIP, or simply NodePort with kube-proxy or Cilium. These methods are fairly simple to implement on the Kubernetes side. The challenge is how to route traffic from the network gateway to these Kubernetes load balancers. If traffic is routed statically, all incoming requests destined for any container on any node are forced to enter the Kubernetes cluster through a single node. This creates a potential bottleneck as well as a single point of failure.

A better approach is to use a dynamic routing protocol (e.g., BGP with MetalLB), which solves the high availability issue. In this scenario, the frontend public IP address is announced from every Kubernetes node (anycast), and the physical network (your switches or routers) load balances traffic at Layer 3 across all Kubernetes nodes running MetalLB. Each node then performs a Layer-4 lookup to check whether the destination application is running locally. In most cases it is not, so the node has to forward the traffic once more, across the physical network, to a node that runs the destination container. This leads to suboptimal routing.
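To put a number on "in most cases", here is a back-of-the-envelope Python sketch. It assumes the fabric spreads flows evenly across all N nodes announcing the anycast address and that the application's pods run on k of those nodes; the function names are illustrative, and the random simulation is only a stand-in for ECMP hashing.

```python
import random


def extra_hop_fraction(total_nodes: int, nodes_with_backend: int) -> float:
    """Expected share of flows that land on a node with no local backend pod,
    assuming the fabric spreads flows evenly across all announcing nodes."""
    if not 0 < nodes_with_backend <= total_nodes:
        raise ValueError("need 0 < nodes_with_backend <= total_nodes")
    return (total_nodes - nodes_with_backend) / total_nodes


def simulate(total_nodes: int, nodes_with_backend: int, flows: int, seed: int = 0) -> float:
    """Monte Carlo check: pick an ingress node uniformly per flow (ECMP stand-in)."""
    rng = random.Random(seed)
    backend_nodes = set(range(nodes_with_backend))  # the first k nodes host the pod
    misses = sum(rng.randrange(total_nodes) not in backend_nodes for _ in range(flows))
    return misses / flows


# 10 nodes, application pods on 2 of them:
print(extra_hop_fraction(10, 2))  # 0.8
print(simulate(10, 2, 100_000))
```

Under these assumptions, with 10 nodes and pods on 2 of them, about 80% of flows cross the physical network a second time before reaching a container.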

In addition, using BGP for load balancing in this scenario conflicts with using BGP for anything else. A common example is a conflict with Calico CNI, which also uses BGP.

References:

https://metallb.universe.tf/configuration/calico/#workaround-peer-with-spine-routers

https://docs.projectcalico.org/networking/bgp

BOTTOM LINE: Application servers are not the best place to perform load balancing.

- Potentially suboptimal routing

- Public IP addresses hosted on Kubernetes nodes (a security concern)

- BGP configuration changes on the routers/switches every time you add, move, or remove a Kubernetes node

- Conflicts with Calico CNI

Why are network gateways great places for Layer-4 load balancing functions?

Every private network needs some kind of network gateway. It can be a Linux router, a cheap appliance, or an expensive, specialized high-performance router. Every packet that enters or leaves the private cloud passes through the gateway's routing decision, and the gateway rewrites packet headers anyway as part of routing. Adding Layer-4 load balancing here does not change the architecture of your network, and the overhead it imposes on the gateway is small. This makes the network gateway the natural place for the Layer-4 load balancing function.

What are the options (suitable for Kubernetes)?

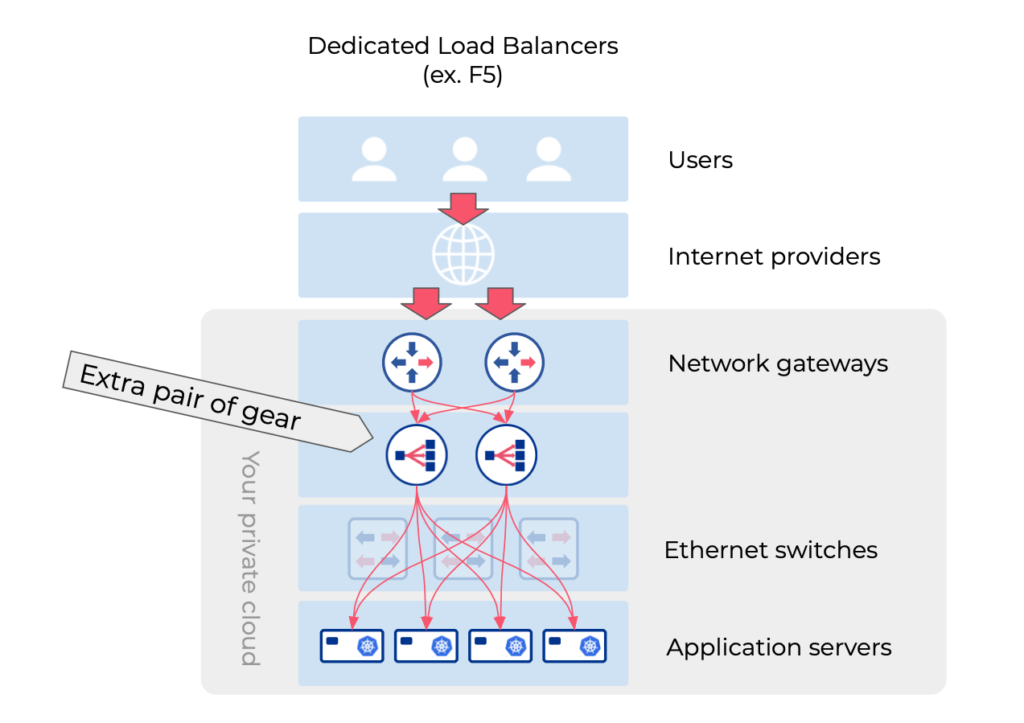

Is it overkill to use specialized load balancer appliances (like F5) for a Layer-4 k8s Load Balancer?

Some modern load balancer appliances provide integration with Kubernetes. Specialized load balancers offer many sophisticated (and expensive) load balancing services, of which Layer-4 is just a small fraction. If your goal is just to expose Kubernetes applications and/or route traffic into Kubernetes, a specialized load balancer is overkill. Also, most specialized load balancers are not designed for other essential gateway functions such as routing or NAT, so they require a dedicated router as a separate appliance. The opposite is also true: most routers do not provide Layer-4 load balancing functions.

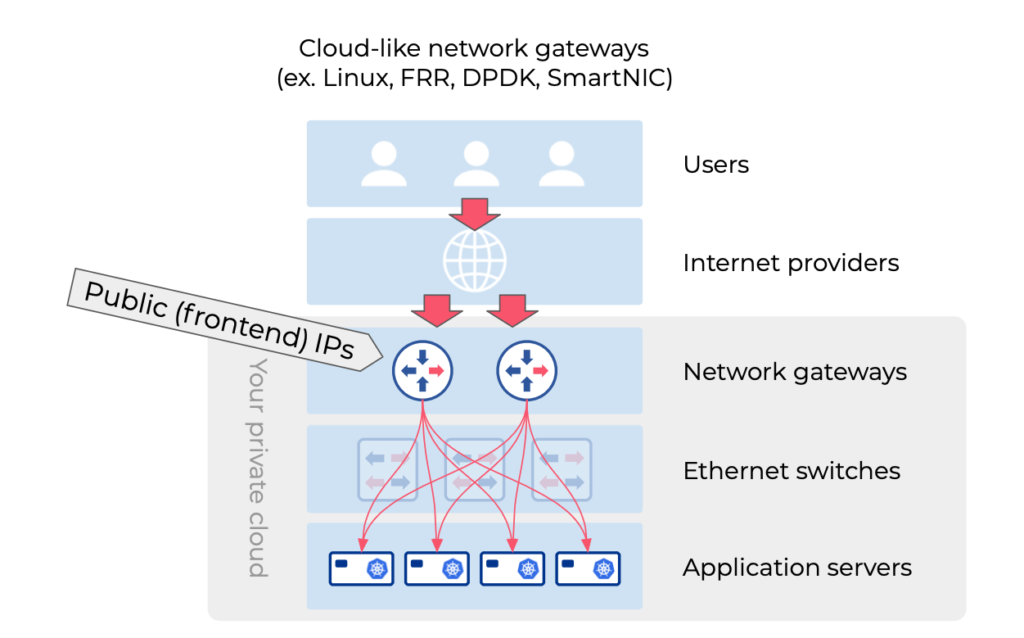

Practical, cloud-like network gateway with native Kubernetes integration

A Linux machine, optionally with DPDK or SmartNIC acceleration, can make a great network gateway with a Layer-4 load balancer and native Kubernetes integration. Almost any common Linux distribution already has the minimum building blocks, i.e., Layer-2, Layer-3, and Layer-4 network functionality.

- FRR (Free Range Routing) is an open-source routing protocol suite with one of the most advanced BGP implementations, on par with those of traditional network routers.

- nftables (like iptables, but better) provides packet filtering that can inspect packet headers, including TCP/UDP ports, and perform basic actions, e.g., permit or deny certain flows, or rewrite packet headers for SNAT/DNAT. SNAT (source network address translation) is an essential network gateway function that lets private networks access resources on the public Internet. DNAT (destination network address translation) is the core function a Layer-4 load balancer needs: it rewrites the destination address and port of incoming packets from the frontend to a selected backend.
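To make the DNAT role concrete, here is a toy Python model of what a gateway does when it acts as a Layer-4 load balancer. It is neither nftables nor Netris code: new flows to the frontend get a backend chosen round-robin (similar in spirit to the nftables `numgen inc` expression), a connection-tracking table keeps later packets of the same flow on the same backend, and replies are reverse-translated so the client only ever sees the frontend. The class name is hypothetical; the frontend and backend addresses are borrowed from the L4LB example below.

```python
import itertools
from typing import Dict, List, Tuple

Addr = Tuple[str, int]  # (ip, port)


class DnatLoadBalancer:
    """Toy model of gateway DNAT load balancing with connection tracking."""

    def __init__(self, frontend: Addr, backends: List[Addr]) -> None:
        self.frontend = frontend
        self._next = itertools.cycle(backends)  # round-robin over backends
        # conntrack: (client, frontend) -> backend chosen for that flow
        self.conntrack: Dict[Tuple[Addr, Addr], Addr] = {}

    def inbound(self, client: Addr, dst: Addr) -> Addr:
        """Return the rewritten destination for a client -> gateway packet."""
        if dst != self.frontend:
            return dst  # not load-balanced traffic: routed unchanged
        key = (client, dst)
        if key not in self.conntrack:  # first packet of a new flow
            self.conntrack[key] = next(self._next)
        return self.conntrack[key]

    def outbound(self, backend: Addr, client: Addr) -> Addr:
        """Return the rewritten source for a backend -> client reply."""
        if self.conntrack.get((client, self.frontend)) == backend:
            return self.frontend  # reverse NAT: hide the backend address
        return backend


lb = DnatLoadBalancer(("50.117.59.138", 80),
                      [("192.168.110.68", 30590), ("192.168.110.69", 30590)])
c1, c2 = ("203.0.113.10", 40001), ("203.0.113.11", 40002)
print(lb.inbound(c1, lb.frontend))  # ('192.168.110.68', 30590)
print(lb.inbound(c2, lb.frontend))  # ('192.168.110.69', 30590)
print(lb.inbound(c1, lb.frontend))  # same flow, same backend: ('192.168.110.68', 30590)
```

In a real Linux gateway, netfilter applies the NAT decision to the first packet of a connection and conntrack handles the rest, which is what the `conntrack` dictionary imitates here.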

Netris SoftGate is software that automatically configures FRR, nftables, Linux bridges, and other parts of the Linux OS to provide the essential network services for your private cloud. Optional DPDK-based network acceleration can leverage SmartNIC hardware to provide routing and Layer-4 load balancing for up to 100 Gbps of traffic per node. You can run two nodes in automatic HA mode, or scale out horizontally.

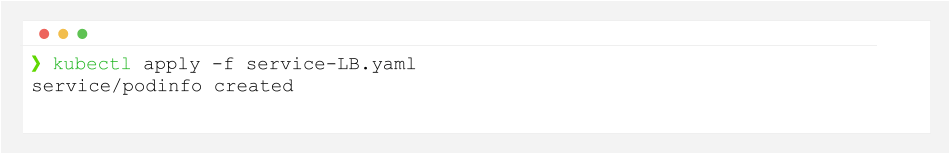

Netris Operator provides Kubernetes CRDs (custom resource definitions) for essential network services. Below is an example YAML for a Layer-4 load balancer.

```yaml
spec:
  backend:
  - 192.168.110.68:30590
  - 192.168.110.69:30590
  check:
    timeout: 2000
    type: tcp
  frontend:
    ip: 50.117.59.138
    port: 80
  ownerTenant: Admin
  protocol: tcp
  site: US/NYC
  state: active
```

Netris Operator pushes this YAML data to the Netris controller, and Netris SoftGate (your Linux-based network gateway) then configures itself automatically to provision the requested network services.
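The `check` block in the spec above asks for a TCP health check. As a rough illustration of what such a check does, here is a Python sketch that treats a backend as healthy if a TCP connection completes in time. It assumes `timeout` is in milliseconds (2000 reading as two seconds), which the example does not state, and the function names are hypothetical rather than part of Netris.

```python
import socket
from typing import List


def tcp_check(host: str, port: int, timeout_ms: int = 2000) -> bool:
    """Return True if a TCP connection to host:port completes within timeout_ms."""
    try:
        with socket.create_connection((host, port), timeout=timeout_ms / 1000):
            return True
    except OSError:  # refused, unreachable, or timed out (socket.timeout is an OSError)
        return False


def healthy_backends(backends: List[str], timeout_ms: int = 2000) -> List[str]:
    """Filter "ip:port" backend strings down to those passing the TCP check."""
    alive = []
    for backend in backends:
        host, _, port = backend.rpartition(":")
        if tcp_check(host, int(port), timeout_ms):
            alive.append(backend)
    return alive
```

Calling `healthy_backends(["192.168.110.68:30590", "192.168.110.69:30590"])` would return only the backends that accept a connection, which is the set a load balancer should keep sending new flows to.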

Netris Operator continuously monitors kube-api. If you create a Service of type LoadBalancer, the operator automatically creates the corresponding Netris CRD. The Netris controller then assigns a public IP address from the IP pool reserved for K8s load balancing and provisions the load balancers on Netris SoftGate. For example:

```yaml
kind: Service
metadata:
  name: podinfo
spec:
  type: LoadBalancer
  selector:
    app: podinfo
  ports:
  - name: http
    port: 80
    protocol: TCP
    targetPort: http
...
```
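The operator's actual code is not shown here, so the following is only a hypothetical Python sketch of the translation described above: take a Service of type LoadBalancer as kube-api returns it, allocate a frontend IP from a pool, and point the backends at each node. Pointing backends at every node's NodePort is an assumption suggested by the 30590 backend port in the L4LB example; the pool CIDR, the in-use set, and the function names are invented for illustration, and fields such as `ownerTenant`, `site`, `state`, and `check` are left out.

```python
import ipaddress
from typing import List, Set


def allocate_ip(pool_cidr: str, in_use: Set[str]) -> str:
    """Return the first host address in the pool that is not already in use."""
    for host in ipaddress.ip_network(pool_cidr).hosts():
        if str(host) not in in_use:
            return str(host)
    raise RuntimeError(f"IP pool {pool_cidr} is exhausted")


def service_to_l4lb(service: dict, node_ips: List[str],
                    pool_cidr: str, in_use: Set[str]) -> List[dict]:
    """Map a LoadBalancer Service to L4LB-like specs (hypothetical, one per port).

    Backends are every node's IP plus the port's nodePort, which the API
    server fills into spec.ports[] once the Service has been created.
    """
    if service["spec"].get("type") != "LoadBalancer":
        return []  # only LoadBalancer Services get a frontend IP
    frontend_ip = allocate_ip(pool_cidr, in_use)
    in_use.add(frontend_ip)  # reserve it so the next Service gets another IP
    return [
        {
            "frontend": {"ip": frontend_ip, "port": p["port"]},
            "backend": [f"{ip}:{p['nodePort']}" for ip in node_ips],
            "protocol": p.get("protocol", "TCP").lower(),
        }
        for p in service["spec"]["ports"]
    ]


# The podinfo Service as kube-api might return it; nodePort 30590 is assumed.
podinfo = {
    "kind": "Service",
    "metadata": {"name": "podinfo"},
    "spec": {
        "type": "LoadBalancer",
        "selector": {"app": "podinfo"},
        "ports": [{"name": "http", "port": 80, "protocol": "TCP",
                   "targetPort": "http", "nodePort": 30590}],
    },
}
used = {"50.117.59.137"}  # hypothetical: .137 is already taken in the pool
specs = service_to_l4lb(podinfo, ["192.168.110.68", "192.168.110.69"],
                        "50.117.59.136/29", used)
print(specs[0])
# {'frontend': {'ip': '50.117.59.138', 'port': 80}, 'backend': ['192.168.110.68:30590', '192.168.110.69:30590'], 'protocol': 'tcp'}
```

Under these assumptions, the podinfo Service yields the same frontend, backends, and protocol as the L4LB example earlier in this article.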

Comparison: Load balancer options

| Feature | Netris | MetalLB | KubeVIP | Avi (VMware) | F5 | Citrix ADC |
|---|---|---|---|---|---|---|
| K8s type: LoadBalancer | ■ | ■ | ■ | ■ | ■ | ■ |
| Respects externalTrafficPolicy | ■ | ■ | – | ■ | – | ■ |
| Exposes default-type (ClusterIP) services externally | ■ | – | – | – | – | – |
| Frontend IP address sharing | ■ | – | – | ■ | – | – |
| Immediate on-demand provisioning | ■ | ■ | ■ | ■ | ■ | ■ |
| Runs on the gateway | ■ | – | – | – | – | – |
| Terraform integration | ■ | – | – | ■ | ■ | ■ |
| REST API | ■ | – | – | ■ | ■ | ■ |
| Intuitive GUI | ■ | – | – | ■ | ■ | ■ |
| SmartNIC HW acceleration | ■ | – | – | ■ | – | ■ |
| XDP SW acceleration | ■ | – | – | – | – | – |
| Optimal routing | ■ | – | – | ■ | ■ | ■ |
| Open standard protocols | ■ | ■ | ■ | – | – | – |
| Generic TCP LB | ■ | – | – | ■ | ■ | ■ |
| Generic UDP LB | ■ | – | – | ■ | ■ | ■ |
| Auto-add Kubernetes nodes | ■ | – | – | – | – | – |
| Runs on commodity hardware | ■ | ■ | ■ | ■ | – | – |
| Free version w/ Slack community | ■ | ■ | – | – | – | – |
| Paid enterprise support w/ SLAs | ■ | – | – | ■ | ■ | ■ |

I've learned a lot as a network ops practitioner myself, and I've also learned a lot from the experience of our customers who run mid- to large-scale private cloud infrastructure with Netris.

My goal here is to share what I've learned, in the hope that it helps when you design and build your next infrastructure, or even a small home/office lab.

Meanwhile, if you are interested in Netris, you can try our sandbox environment or install the free version, which is suitable for anything from a small home/office lab up to a small production deployment of one or two cabinets.

Your feedback, comments, and questions about Netris and this particular article are highly appreciated.

Thanks for reading this far!